One of the first projects I worked on at Automattic was a small A/B test for the first screen of the WordPress.com sign-up process. As the first screen in our funnel, it played a critical role, but it hadn’t been tested in quite some time. For my first attempt at improving conversions on that screen, I tweaked the visual design and completely rewrote the copy. Setting up the test and tracking the results felt really easy compared to anything I had done before. I felt empowered, and along the way I discovered how projects work at Automattic.

That first test was a success and became the default for all our visitors. It stayed that way for a little under a year as our priorities shifted to higher-impact areas of our funnel. Over time, bits of feedback trickled in that kept me thinking about possible improvements, but it wasn’t until recently that I decided to act on them.

The problem

One of the most common complaints I heard about our screen was that the terminology was confusing. We asked people what type of site they wanted and presented them with four options: a blog, a website, a portfolio, and an online store. These were some of the questions that kept coming up: What’s the difference between a website and a blog? Can I change my mind later? Two of these options sound good; what if I want both?

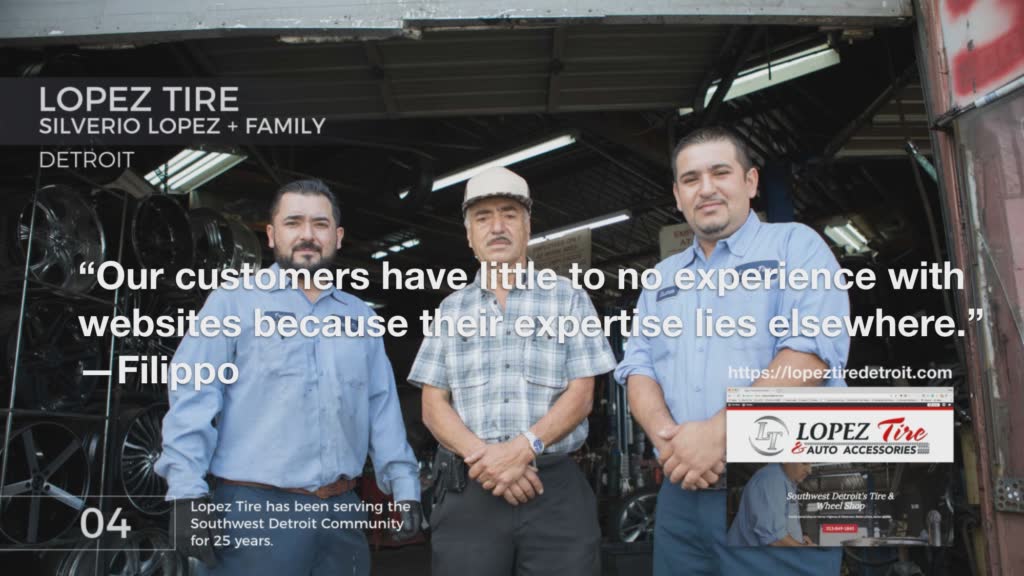

Our mistake was assuming that people understood concepts that seemed simple to us but were actually foreign to them. Looking back at all the polls I had run and the conversations I’d had with our customers over the past year, this made a lot of sense. I learned that our customers are not like us and that the majority of people signing up at WordPress.com had little to no experience building sites or using WordPress.

A new hope

Armed with this awareness of our customers, I reworked this screen to simulate a first meeting between a design agency and its client. The screen would ask visitors a series of questions to understand their needs, and then our tools would build a site that addressed their goals.

My biggest concerns with this approach were 1) not scaring people off with too many questions so early in the sign-up flow, and 2) knowing which questions to ask. I quickly developed high-level strategies to tackle both of these problems. Designing with a mobile context in mind would ensure that answering our questions was quick and easy, and a tool like Hotjar would let me quickly run a series of polls to validate and refine my questions.

Learning our customers’ vocabulary

One of my questions could be answered in a number of ways and therefore required an open text field to collect people’s responses. Of course, typing on a phone sucks, so I wanted to make the field as painless as possible. An autocomplete feature made the most sense because it offers suggestions as people type their answer. Not only would it save people from typing out the full word, but it would also give us cleaner data. To pull this off, I needed a collection of commonly used terms to power the suggestions.
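To make the idea concrete, here is a minimal sketch of frequency-ranked prefix autocomplete. It assumes the vocabulary is a dict of lowercase terms mapped to how often each term appeared in poll responses; the terms, counts, and the `suggest` function are hypothetical illustrations, not our production code or our actual poll data.

```python
from typing import Dict, List

# Hypothetical vocabulary: lowercase term -> number of poll responses that used it.
TERM_COUNTS: Dict[str, int] = {
    "blog": 120,
    "bakery": 15,
    "band": 40,
    "photography": 80,
    "portfolio": 65,
    "restaurant": 30,
}


def suggest(prefix: str, counts: Dict[str, int], limit: int = 3) -> List[str]:
    """Return up to `limit` terms that start with `prefix`, most common first."""
    p = prefix.strip().lower()
    if not p:
        return []  # no suggestions until the person starts typing
    matches = [term for term in counts if term.startswith(p)]
    # Most frequent first; ties broken alphabetically so the order is stable.
    matches.sort(key=lambda term: (-counts[term], term))
    return matches[:limit]


print(suggest("b", TERM_COUNTS))   # ['blog', 'band', 'bakery']
print(suggest("Po", TERM_COUNTS))  # ['portfolio']
```

Ranking by frequency means the answers people give most often surface after only a letter or two, which is where the time savings on a phone keyboard come from.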

I created a new poll that included the question as it would appear in my design and quickly noticed the answers weren’t what I expected. Thanks to Hotjar, I was able to turn off the poll, rephrase the question, and launch the poll again within a matter of minutes, all without writing a single line of code. This time around I was happy with the data coming in and let the poll run until we had enough responses. After a couple of days we had a lot of raw data to comb through, so I used a spreadsheet and a PHP script to clean it up and help us make sense of it all.
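The post doesn’t show the PHP script, so the following is only a rough Python sketch of the kind of cleanup involved: normalizing free-text answers so that variants like “Photography” and “ photography!” count as the same term, then tallying them. The `normalize` and `tally` functions and the sample responses are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple


def normalize(response: str) -> str:
    """Lowercase a free-text answer, replace punctuation with spaces, collapse whitespace."""
    text = response.strip().lower()
    text = re.sub(r"[^\w\s]", " ", text)      # replace punctuation with spaces
    return re.sub(r"\s+", " ", text).strip()  # collapse runs of whitespace


def tally(responses: Iterable[str]) -> List[Tuple[str, int]]:
    """Count normalized answers, skipping empty ones, most common first."""
    counts = Counter(n for n in (normalize(r) for r in responses) if n)
    return counts.most_common()


raw = ["Photography", " photography!", "Food blog", "food  blog", "", "Travel"]
print(tally(raw))  # [('photography', 2), ('food blog', 2), ('travel', 1)]
```

A tally like this is also a natural source for the term counts that power autocomplete suggestions.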

Build, measure, learn

Another question asked people to pick their answer(s) from a handful of choices. I drafted a list of options based on data we had collected in the past and ran them in a poll along with an “other” field that allowed people to type their answer if they couldn’t find what they were looking for in my list.

The first iteration of this poll was dominated by responses entered in the “other” field. Some of them were variations of options already on my list, but there were also lots of common responses that weren’t represented at all. I used this data to update my list and then published another version of the poll. The results improved noticeably but still weren’t quite there, so I rinsed and repeated. This time I got it right: the majority of responses were selected from my list rather than entered into the “other” field. On top of that, most of the people who did fill out the “other” field either flipped me the bird or just said hi.

Progress on multiple fronts

I wasted no time while these polls were running and started building the form right after the design was sketched out. I built the code in small chunks, which made it easy to review and kept the project’s momentum going with visible progress. As the data came in, I added it to my work in progress until I had enough of a prototype to run some usability tests. The tests revealed a few minor tweaks but mostly confirmed that we were on the right path. Then, shortly after I completed my last poll, I had everything I needed to run my A/B test at scale and see how the new design stacked up against the old one.

The results

It didn’t take long for us to reach statistical significance, but I still let the test run for at least seven days to account for day-of-week fluctuations in traffic. The results indicated that this design profoundly influenced our business metrics. As NEA’s Future of Design report puts it:
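The post doesn’t say which statistical test was used, so treat this as an illustrative sketch only: a pooled two-proportion z-test is one common way to compare conversion rates between two variants, and the `ready_to_call` helper encodes the “wait at least seven days” rule described above. The function names, the 0.05 threshold, and the conversion counts are hypothetical, not our real results.

```python
import math


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled two-proportion z statistic for variant B versus control A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2))


def ready_to_call(days_running: int, p_value: float,
                  alpha: float = 0.05, min_days: int = 7) -> bool:
    """Significant AND run for at least a full week, so every weekday is covered."""
    return days_running >= min_days and p_value < alpha


# Hypothetical counts: 10% vs 11% conversion on 10,000 visitors per variant.
z = two_proportion_z(1000, 10_000, 1100, 10_000)
p = two_sided_p_value(z)
print(f"z = {z:.2f}, p = {p:.3f}")
print(ready_to_call(days_running=3, p_value=p))  # significant, but too early
print(ready_to_call(days_running=7, p_value=p))
```

Requiring a minimum duration on top of significance guards against a result that only reflects, say, weekday visitors behaving differently from weekend ones.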

Ultimately everything a design team does has consequences (both good and bad) for a business. The more you know about the business and its goals/intentions, the better a design team is positioned to deliver good experiences and results for the business.

— Tim Riley: Senior Director of Digital Experience at Warby Parker

This post also appeared on Automattic’s Design blog.